
This post records what I learned by following the YouTube channel "따배" linked below.

[Related Theory]

- N/A

[Precondition]

(1) Test environment

(1.1) Rocky Linux Cluster

: Built by hand

[root@k8s-master ~]# k get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready control-plane 30d v1.27.2 192.168.56.30 <none> Rocky Linux 8.10 (Green Obsidian) 4.18.0-553.33.1.el8_10.x86_64 containerd://1.6.32

k8s-node1 Ready <none> 30d v1.27.2 192.168.56.31 <none> Rocky Linux 8.8 (Green Obsidian) 4.18.0-477.10.1.el8_8.x86_64 containerd://1.6.21

k8s-node2 Ready <none> 30d v1.27.2 192.168.56.32 <none> Rocky Linux 8.8 (Green Obsidian) 4.18.0-477.10.1.el8_8.x86_64 containerd://1.6.21

[root@k8s-master ~]#

(1.2) Ubuntu Cluster

: Using the KodeKloud lab environment

controlplane ~ ➜ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

controlplane Ready control-plane 9m6s v1.31.0 192.6.94.6 <none> Ubuntu 22.04.4 LTS 5.4.0-1106-gcp containerd://1.6.26

node01 Ready <none> 8m31s v1.31.0 192.6.94.9 <none> Ubuntu 22.04.4 LTS 5.4.0-1106-gcp containerd://1.6.26

https://learn.kodekloud.com/user/courses/udemy-labs-certified-kubernetes-administrator-with-practice-tests

(2) Required setup

- N/A

[Question#2]

A Kubernetes worker node, named kh8s-w2, is in state NotReady.

Investigate why this is the case, and perform any appropriate steps to bring the node to a Ready state,

ensuring that any changes are made permanent.

[Solve]

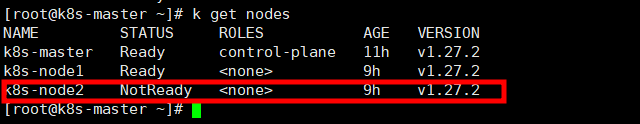

(1) Check node status

: The environment here differs from the one in the YouTube lecture

: k8s-node2 was deliberately modified for this test

-> The problem was reproduced by running systemctl stop kubelet on k8s-node2

[root@k8s-master ~]# k get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 9h v1.27.2

k8s-node1 Ready <none> 7h22m v1.27.2

k8s-node2 Ready <none> 7h22m v1.27.2

[root@k8s-master ~]#

[root@k8s-master ~]#

[root@k8s-master ~]#

[root@k8s-master ~]# ssh k8s-node2

The authenticity of host 'k8s-node2 (192.168.56.32)' can't be established.

ECDSA key fingerprint is SHA256:7yKRDib2g5F02C9PdZN2kCRP7voub0GneUGVeb2JL2M.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'k8s-node2,192.168.56.32' (ECDSA) to the list of known hosts.

root@k8s-node2's password:

Last login: Fri Jan 31 15:55:10 2025 from 192.168.56.1

[root@k8s-node2 ~]# systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Fri 2025-01-31 15:55:15 KST; 9h ago

Docs: https://kubernetes.io/docs/

Main PID: 31171 (kubelet)

Tasks: 11 (limit: 18865)

Memory: 69.7M

CGroup: /system.slice/kubelet.service

└─31171 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.con>

Jan 31 22:07:46 k8s-node2 kubelet[31171]: E0131 22:07:46.631723 31171 pod_workers.go:1294] "Error syncing pod, skipping" err="failed to >

Jan 31 22:07:58 k8s-node2 kubelet[31171]: E0131 22:07:58.630734 31171 pod_workers.go:1294] "Error syncing pod, skipping" err="failed to >

Jan 31 22:08:17 k8s-node2 kubelet[31171]: I0131 22:08:17.850190 31171 pod_startup_latency_tracker.go:102] "Observed pod startup duration>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.000300 31171 pod_startup_latency_tracker.go:102] "Observed pod startup duration>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.000717 31171 topology_manager.go:212] "Topology Admit Handler"

Jan 31 22:38:28 k8s-node2 kubelet[31171]: E0131 22:38:28.000778 31171 cpu_manager.go:395] "RemoveStaleState: removing container" podUID=>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: E0131 22:38:28.000786 31171 cpu_manager.go:395] "RemoveStaleState: removing container" podUID=>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.000807 31171 memory_manager.go:346] "RemoveStaleState removing state" podUID="0>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.161547 31171 reconciler_common.go:258] "operationExecutor.VerifyControllerAttac>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.161586 31171 reconciler_common.go:258] "operationExecutor.VerifyControllerAttac>

[root@k8s-node2 ~]# systemctl stop kubelet

[root@k8s-node2 ~]# systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead) since Sat 2025-02-01 01:09:46 KST; 2s ago

Docs: https://kubernetes.io/docs/

Process: 31171 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=>

Main PID: 31171 (code=exited, status=0/SUCCESS)

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.000717 31171 topology_manager.go:212] "Topology Admit Handler"

Jan 31 22:38:28 k8s-node2 kubelet[31171]: E0131 22:38:28.000778 31171 cpu_manager.go:395] "RemoveStaleState: removing container" podUID=>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: E0131 22:38:28.000786 31171 cpu_manager.go:395] "RemoveStaleState: removing container" podUID=>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.000807 31171 memory_manager.go:346] "RemoveStaleState removing state" podUID="0>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.161547 31171 reconciler_common.go:258] "operationExecutor.VerifyControllerAttac>

Jan 31 22:38:28 k8s-node2 kubelet[31171]: I0131 22:38:28.161586 31171 reconciler_common.go:258] "operationExecutor.VerifyControllerAttac>

Feb 01 01:09:46 k8s-node2 systemd[1]: Stopping kubelet: The Kubernetes Node Agent...

Feb 01 01:09:46 k8s-node2 kubelet[31171]: I0201 01:09:46.760881 31171 dynamic_cafile_content.go:171] "Shutting down controller" name="cl>

Feb 01 01:09:46 k8s-node2 systemd[1]: kubelet.service: Succeeded.

Feb 01 01:09:46 k8s-node2 systemd[1]: Stopped kubelet: The Kubernetes Node Agent.

[root@k8s-node2 ~]# exit

logout

Connection to k8s-node2 closed.

[root@k8s-master ~]#

[root@k8s-master ~]#

[root@k8s-master ~]# k get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 11h v1.27.2

k8s-node1 Ready <none> 9h v1.27.2

k8s-node2 Ready <none> 9h v1.27.2

[root@k8s-master ~]# k get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 11h v1.27.2

k8s-node1 Ready <none> 9h v1.27.2

k8s-node2 Ready <none> 9h v1.27.2

[root@k8s-master ~]# k get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 11h v1.27.2

k8s-node1 Ready <none> 9h v1.27.2

k8s-node2 NotReady <none> 9h v1.27.2

[root@k8s-master ~]#

: As shown above, after connecting to k8s-node2 and stopping kubelet, k8s-node2 changed to the NotReady state
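With more than a few nodes, scanning the STATUS column by eye gets tedious. The following is a minimal sketch, not part of the original lecture, that filters `kubectl get nodes` output down to the nodes that are not Ready. The helper name `not_ready_nodes` is hypothetical, and it assumes the default column layout shown in the transcript above (NAME first, STATUS second).

```shell
# List nodes whose STATUS is not "Ready".
# Reads `kubectl get nodes` output from stdin; the header row and blank lines are skipped.
not_ready_nodes() {
  awk 'NF >= 2 && $1 != "NAME" {
         split($2, s, ",")          # STATUS may look like "Ready,SchedulingDisabled"
         if (s[1] != "Ready") print $1
       }'
}

# Usage on the control-plane node (assumes kubectl is already configured):
#   kubectl get nodes | not_ready_nodes
```

Fed the last `k get nodes` output above, this would print only k8s-node2.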

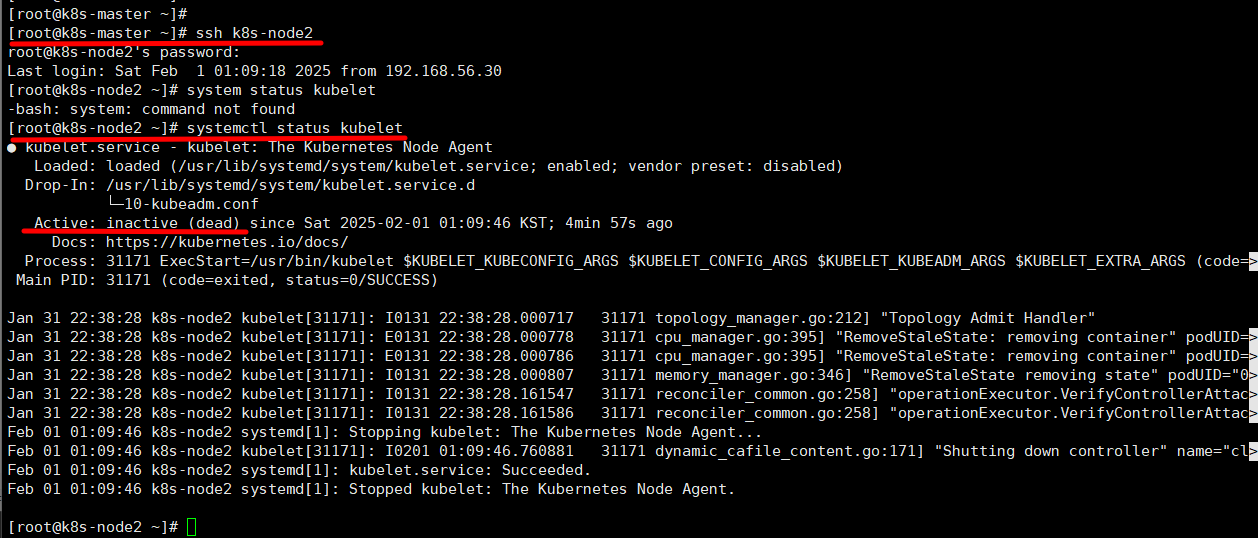

(2) Check kubelet status

: Connect to the node that has the problem and check the kubelet status

: (Even though I caused the problem myself...)

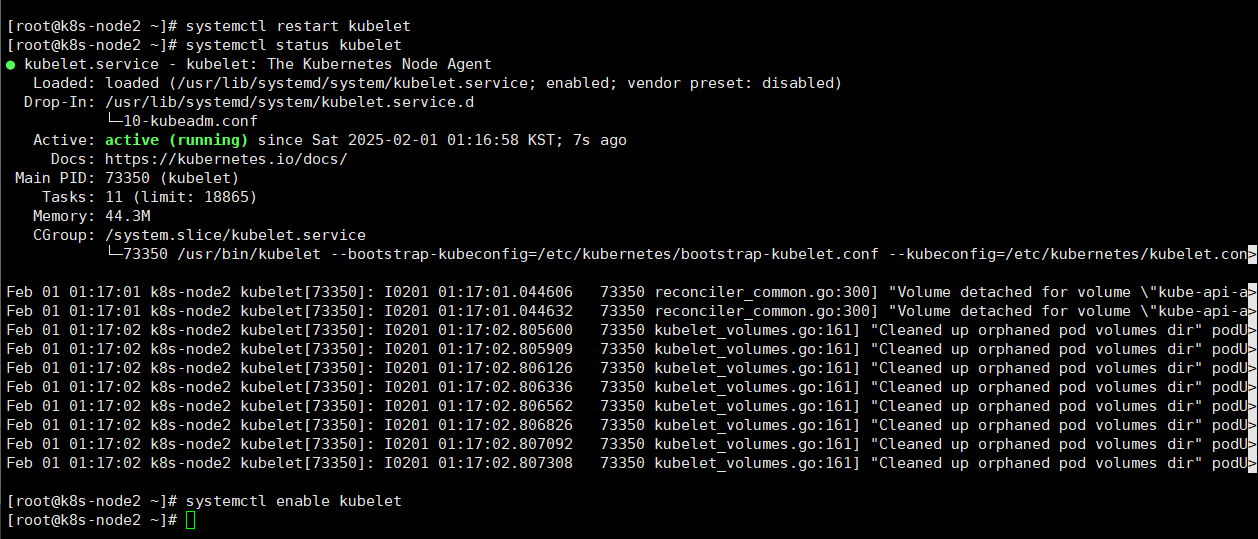
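The check in this step survives only as a screenshot, so here is a minimal sketch of what it could look like in text form. It assumes kubelet runs as a systemd unit named `kubelet`, as in the `systemctl status kubelet.service` output earlier; the helper name `kubelet_state` is hypothetical.

```shell
# Print "<active-state>/<enabled-state>" for the kubelet unit on this node,
# for example "inactive/enabled" for a stopped unit that is still enabled at boot.
kubelet_state() (
  # Both subcommands exit non-zero for inactive/disabled units, so only the text is kept.
  active="$(systemctl is-active kubelet 2>/dev/null || true)"
  enabled="$(systemctl is-enabled kubelet 2>/dev/null || true)"
  printf '%s/%s\n' "${active:-unknown}" "${enabled:-unknown}"
)

# Usage on the affected node, followed by the recent unit log:
#   kubelet_state
#   journalctl -u kubelet --no-pager -n 20
```

In the exam scenario the unit may also be disabled, which is why both states are worth checking before deciding on the fix.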

(3) Enable and restart kubelet

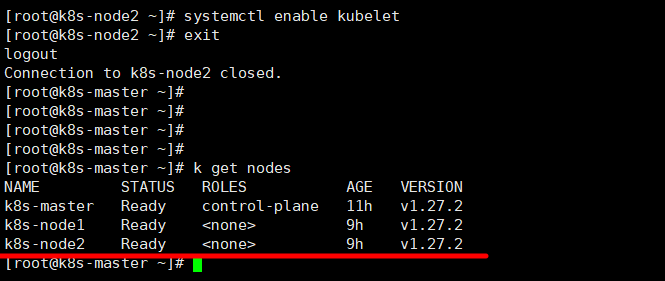
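The question asks for the change to be permanent, which is why the unit is enabled as well as restarted: `enable` makes kubelet start at boot, `restart` brings it up now. A minimal sketch of that sequence follows, assuming root privileges on the affected node; the wrapper name `fix_kubelet` is hypothetical.

```shell
# Enable kubelet at boot, (re)start it now, then report its state.
# Must run as root (or through sudo) on the affected node.
fix_kubelet() {
  systemctl enable kubelet &&    # persist across reboots
  systemctl restart kubelet &&   # start (or restart) the service now
  systemctl is-active kubelet    # prints "active" when the service came up
}

# Usage on the affected node:
#   fix_kubelet
```

`systemctl enable --now kubelet` is a shorter alternative when the unit is simply stopped, since `--now` starts the unit in the same call.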

(4) Verify that the node that had the problem has recovered
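The node does not flip back to Ready the instant kubelet starts, so it can take a few tries of `k get nodes` before the change shows up. As a rough sketch, assuming kubectl is configured on the control-plane node, the polling can be scripted. The helper name `wait_for_ready` and its default retry values are illustrative choices, not something from the lecture.

```shell
# Poll until the node's Ready condition is "True", or give up after N tries.
# Arguments: node name, max tries (default 30), seconds between tries (default 5).
wait_for_ready() (
  node="$1"; tries="${2:-30}"; delay="${3:-5}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # The Ready condition is "True" when healthy and "Unknown" while kubelet is down.
    status="$(kubectl get node "$node" \
      -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}' 2>/dev/null)"
    if [ "$status" = "True" ]; then
      echo "$node is Ready"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "$node is still not Ready" >&2
  return 1
)

# Usage on the control-plane node:
#   wait_for_ready k8s-node2
```

A plain `k get nodes` run a few times, as in step (1), gives the same answer; the loop only saves the manual retries.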
